Source Code: gold-bot

Collaborators: VedanshiShah7, jnahill

I took Northeastern’s CS4100: Artificial Intelligence in Summer 1 2021 and this is my group final project.

This blog post is derived from our final project report.

Introduction

For our final project for CS 4100 Artificial Intelligence at Northeastern University, we created a chess bot that uses monte-carlo tree search to make decisions about the best moves to make to win a chess game. Our project uses both the python-chess library and an open-source project called lichess-bot as a bridge between the Lichess API and our chess engines. We wanted to put our bot into an active chess community and compare its percentile rankings across multiple time formats against the other competitors to measure our bot’s strength. To avoid the ethical dilemma of playing our bot against unknowing human adversaries, we opted to place our bot in the Lichess community bots field (found at lichess.org/player/bots). Here gold-bot played and received an Elo rating, a method for calculating the relative skill levels of players in zero-sum games, against other programmed and voluntarily uploaded chess bots. In order to gain a baseline understanding of the bot’s performance, we first allowed the bot to play rated games while randomly determining what move to play. Then, we implemented our methods, played out 90 rated games, 30 in each time format, and measured the change in performance (Appendix 1).

Methods

The essential goal of gold-bot is to take in a board state and the time left in the game to determine using search which is the best move for gold-bot to make to have the best chance to win the game. Gold-bot uses the monte-carlo method of simulating playouts for a set amount of time and backpropagating the results of those simulations to the parent move. Finally, it determines which of those moves yields the best win rate.

The amount of time gold-bot runs a single pass of the monte-carlo method is proportional to the amount of time gold-bot has left in the game. Therefore, at the beginning of the game, when gold-bot has a lot of time, and the positions usually have the most pieces and moves to consider, gold-bot thinks longer. And when the game is near its end, and generally fewer pieces are left on the board, gold-bot simulates for less time as to not time-out and lose the game.

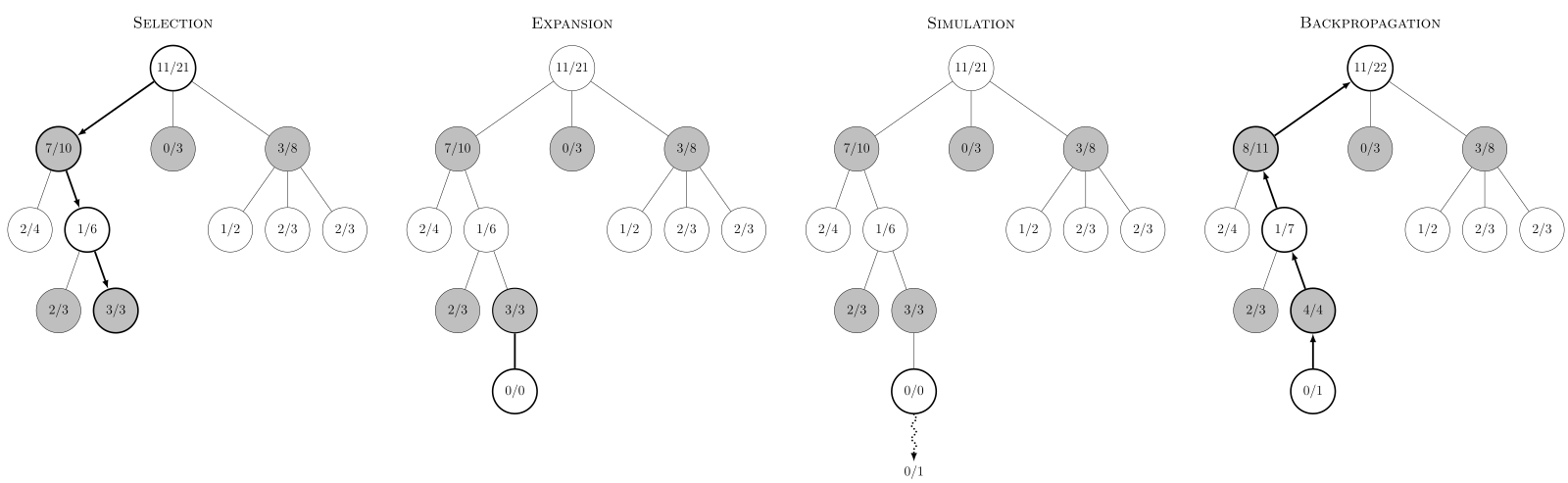

Gold-bot uses the four phases of the monte-carlo tree search method shown in Appendix 4, selection, expansion, simulation, and backpropagation. Selection is performed using the upper confidence bound applied to trees formula, UBC1. Once selection is complete, a random child move is expanded onto the tree. That child is then simulated to 10 moves depth using playouts that result in each side making the move that results in the most immediate material advantage for that respective color. After the simulation and if the game is not over, Pietro Carrera’s “1 3 3 5 9” piece evaluation method is applied to the board state and the result of the winning side by that calculation is backpropagated up to all parent nodes of that child. If the game ends during the simulation, a .5, 1, or 0 win value is backpropagated to all parent nodes of the child for draw, win, and loss respectively. This process of selection, expansion, simulation, and backpropagation is repeated until time to move eventually expires.

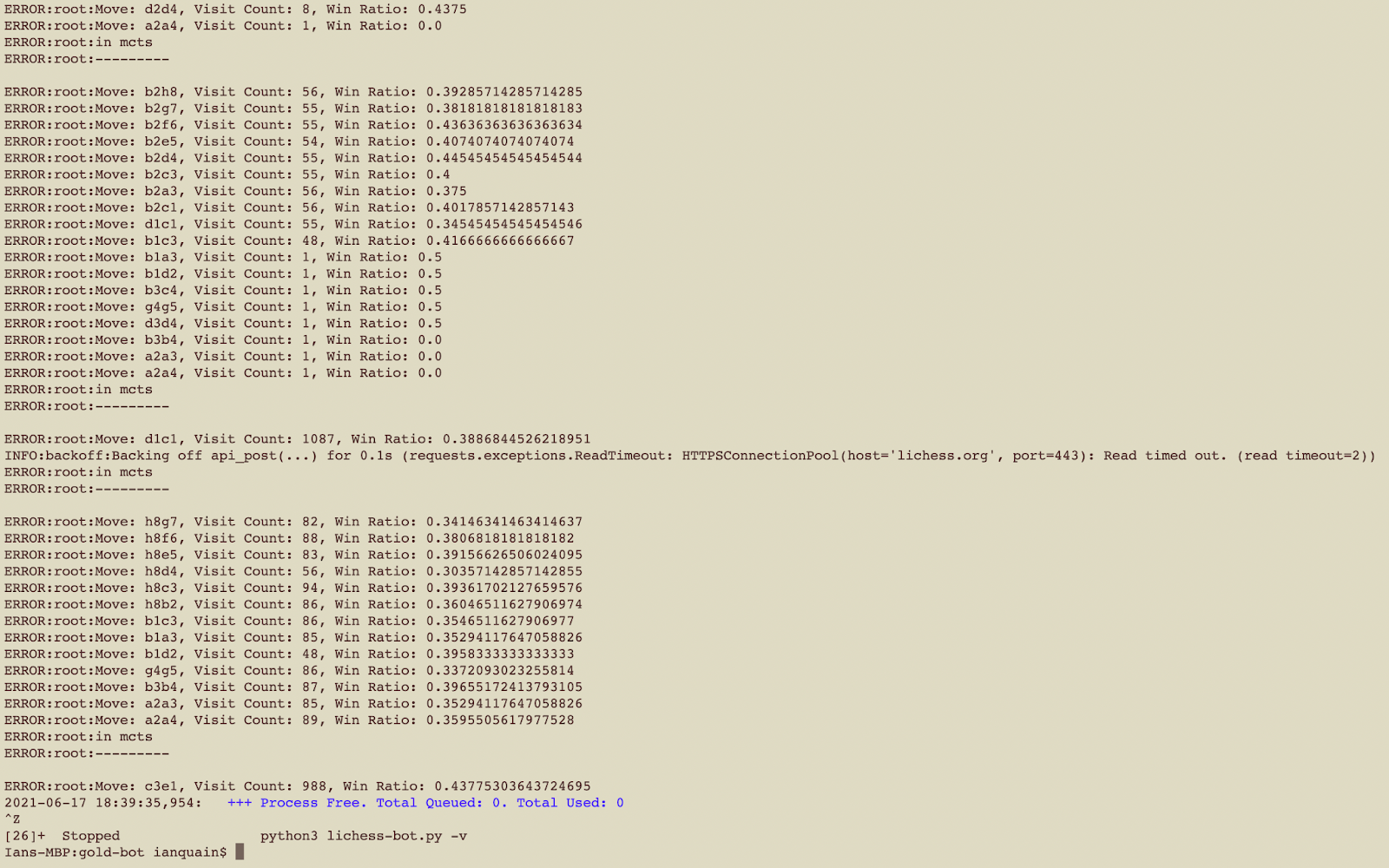

Once gold-bot makes a determination about which move to make by selecting the node that has been visited the most times by our monte-carlo method, it prints to console the result of the search as shown in Appendix 3. The handling of input data and relaying the moves to Lichess is all handled by the open-source starter code, lichess-bot.

At its current implementation, gold-bot accepts challenges from both human and computer players in the time formats of bullet, blitz, and rapid for both unrated and rated games.

Experimental Results

We used game plays against other bots on lichess.org in order to collect data on our bot’s performance. The data in appendix 1 displays the data we collected. We played 3 types of games:

- Bullet: 30 seconds to 2 minutes 59 seconds.

- Blitz: 3 minutes to 7 minutes 59 seconds.

- Rapid: 8 minutes to 24 minutes 59 seconds.

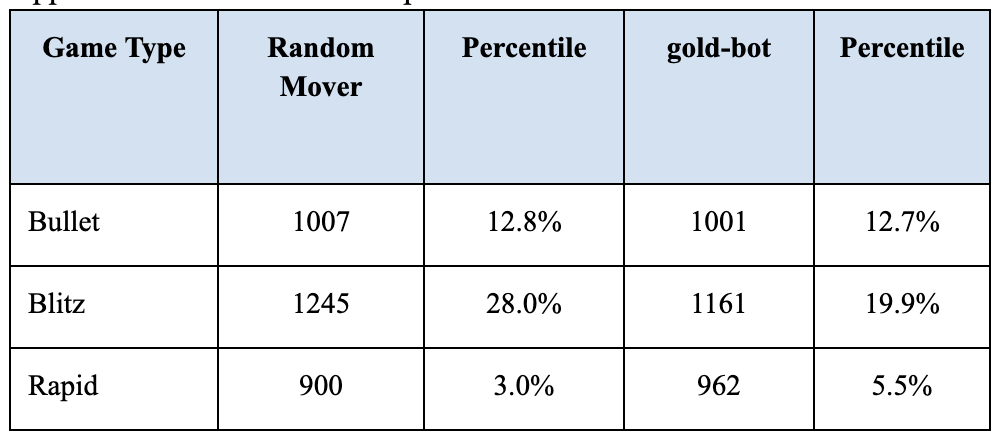

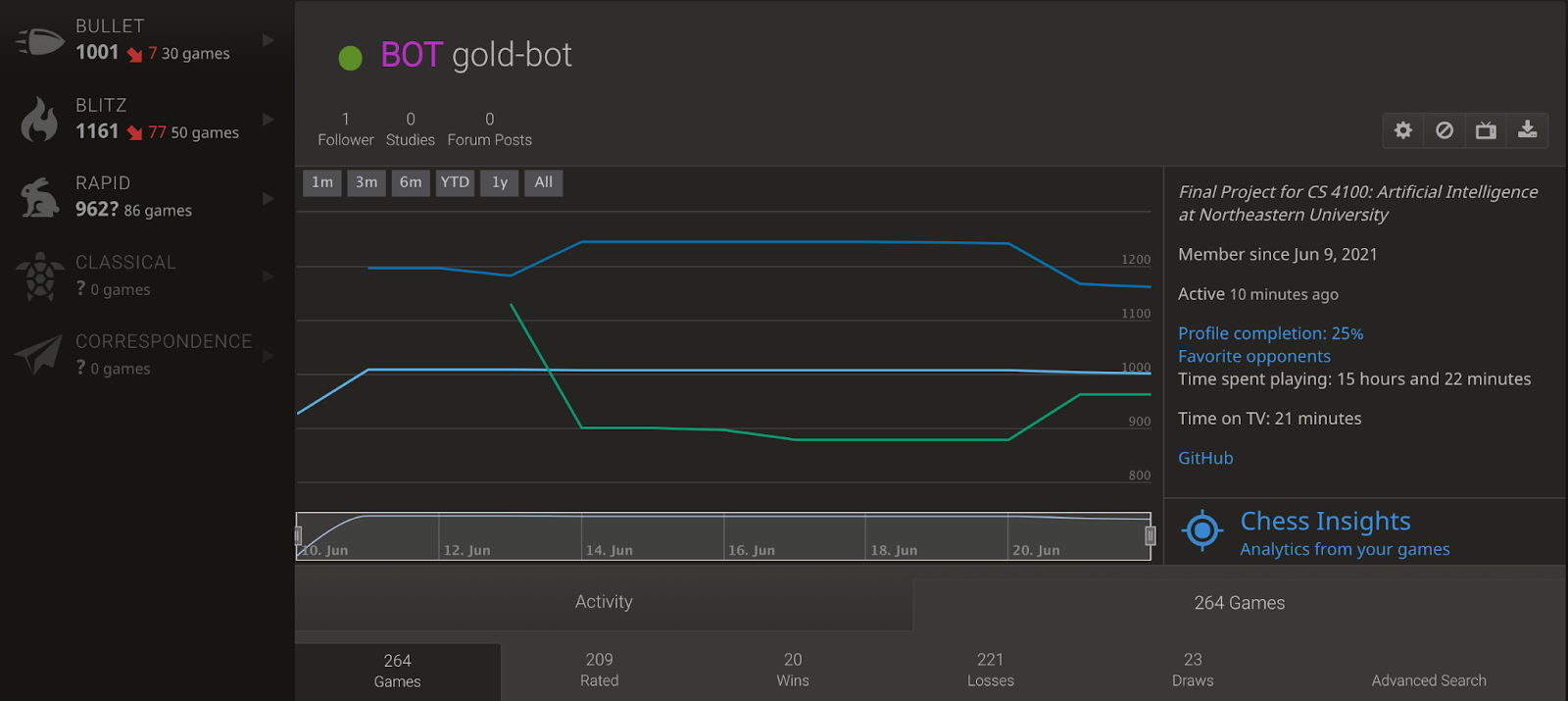

We used the data of the preliminary bot that focused only on playing random moves. Our rating results are displayed in the column labeled before and our bot’s performance is represented as a percentile in the third column. We then played the games with our code’s implementation of moves that used monte-carlo tree search and a heuristic-driven state evaluation function. The ratings after playing with our code are represented in the column named after, and percentiles of our bot’s performance compared to other bots. In total, we played 264 games in order to collect the data that can be found at Appendix 5. Appendix 2 shows our performance progression with our games played.

Retrospective

We learned a lot of lessons during this project. One lesson was that our experimental results might not be totally accurate to how the gold-bot actually performs. This is because there is a finite number of bot opponents that we could play against so the experimental results from the gold-bot’s matches might not be statistically accurate to how the gold-bot would perform across the whole chess community. Also, comparing the gold-bot to the random mover bot is not necessarily a fair comparison because the random mover bot would sometimes win in the faster timeout formats of chess matches. It would sometimes win in the faster time formats because its opponents would time out resulting in a win for the random mover bot.

Another lesson was that although we understood how monte-carlo tree search works in theory from class, actually implementing it to make it work in a real-life situation like our chess bot, is much more difficult. The biggest challenge we found was the scaling factor of chess. The issue is that when we do the simulations, there are too many possibilities of different moves and different turnouts that it is impossible to fully simulate every possible move to the end of the game. The games of chess our bot was playing were timed and our bot did not have the time to simulate every move to the end of the game in the amount of time given in the game. In an optimal scenario, our bot would be able to run simulations much faster to allow for it to evaluate every legal move and make an informed decision in the allotted time of the chess game. To solve this problem, we limited the number of moves ahead that our bot simulated, but it still was not able to simulate all of the possible moves fast enough. We learned that monte-carlo tree search works in theory for complicated problems like playing chess, but in practice, some optimizations must be made to make it work for a successful chess bot. Some optimizations that could be made in the future could include using multithreading, caching the tree after each move so repetitive work is avoided during simulations, and performing some logic during our opponents’ turns to save time during our own turns. Making some of these improvements to the gold-bot would result in better performance.

Appendix

How to install

- Download the repo found at https://github.com/quaini/gold-bot

- NOTE: Only Python 3.7 or later is supported!

- The most recent release, 3.9, can be found at https://www.python.org/downloads/

- Navigate to the directory in cmd/Terminal: cd gold-bot

- If you do not have pip, install it.

- A helpful resource for pip installation: https://packaging.python.org/guides/installing-using-pip-and-virtual-environments/

- Install the requirements using: python3 -m pip install -r requirements.txt

- Run the bot on using: python3 lichess-bot.py -v. Keep the bot running in the console while doing either of the next steps.

If you want to play against gold-bot

- After the bot is running in the terminal/shell, it will appear on the list of online Lichess community bots found here: https://lichess.org/player/bots

- Note you may need to ctrl/cmd F for it.

- Log into your own lichess account (or create one) and click the “play” next to gold-bot’s name.

- Recall that gold-bot only accepts challenges in the bullet, blitz, and rapid time formats. It will play both casual and rated games.

- The game will begin and every result of the monte-carlo method will be outputted to the console as shown in Appendix 3.

Appendix 1: Performance comparison

Appendix 2: Performance progression with games played. June 14th shows initial rating after simulating with a random mover bot. June 21st shows the gold-bot’s final rating after running 30 games in each time format using the methods explained above.

Appendix 3: Sample terminal output on single run of gold-bot’s search. Shows all possible moves to consider, the number of visits MCTS ran through that move, and the moves’s win/loss ratios.

Appendix 4: Steps of Monte Carlo tree search algorithm

Appendix 5: All of the games played during random testing, implementation, and final testing can be found at https://lichess.org/@/gold-bot/all.

Citations

Fiekas, Niklas. “Python-Chess: a Chess Library for Python.” Python-Chess, 2014, python-chess.readthedocs.io/en/latest/.

“Monte Carlo Tree Search.” Wikipedia, Wikimedia Foundation, 8 June 2021, en.wikipedia.org/wiki/Monte_Carlo_tree_search.

ShailChoksi. “ShailChoksi/Lichess-Bot.” GitHub, 18 Dec. 2018, github.com/ShailChoksi/lichess-bot.